Amid rapid technological advancement, one standout development has been the emergence of generative artificial intelligence (generative AI). This subset of AI generates new content in response to almost any request, often from just a few words of text.

Each instalment in the Working with Artificial Intelligence Series delves into a specific use case, illustrating how generative AI can be effectively used in various scenarios. We investigate the most suitable tools for each task, reveal the most effective prompts, and share insider tricks to speed up the journey from input to ideal output.

When using AI tools, especially generative ones like ChatGPT, following best practices is essential. Reviewing and following the Guide on the use of generative AI helps ensure responsible and ethical use of these technologies.

This article explores the user's role in ensuring reliability and trustworthiness when using generative AI for tasks. It covers:

- what reliability and trustworthiness are in generative AI

- the FASTER principles

- the capabilities and limitations of generative AI

- five practical approaches

Ensuring reliability and trustworthiness in generative AI

Generative AI is becoming mainstream and commoditized. Microsoft Copilot, for example, incorporates ChatGPT-like functionality into popular applications like Word, Excel, PowerPoint, and Outlook. We’ll increasingly see AI integrated into day-to-day tools, with the promise of boosting efficiency and productivity. However, successful implementation of these tools depends on two important factors: reliability and trustworthiness.

- Reliability means consistently receiving relevant responses, which is essential for practical usage.

- Trustworthiness means you can trust, or have the means to verify, the accuracy, completeness, and appropriateness of the tools’ outputs.

As AI spreads across different sectors, it’s crucial to critically evaluate and strengthen these aspects, recognizing them as not only technical requirements but also ethical responsibilities.

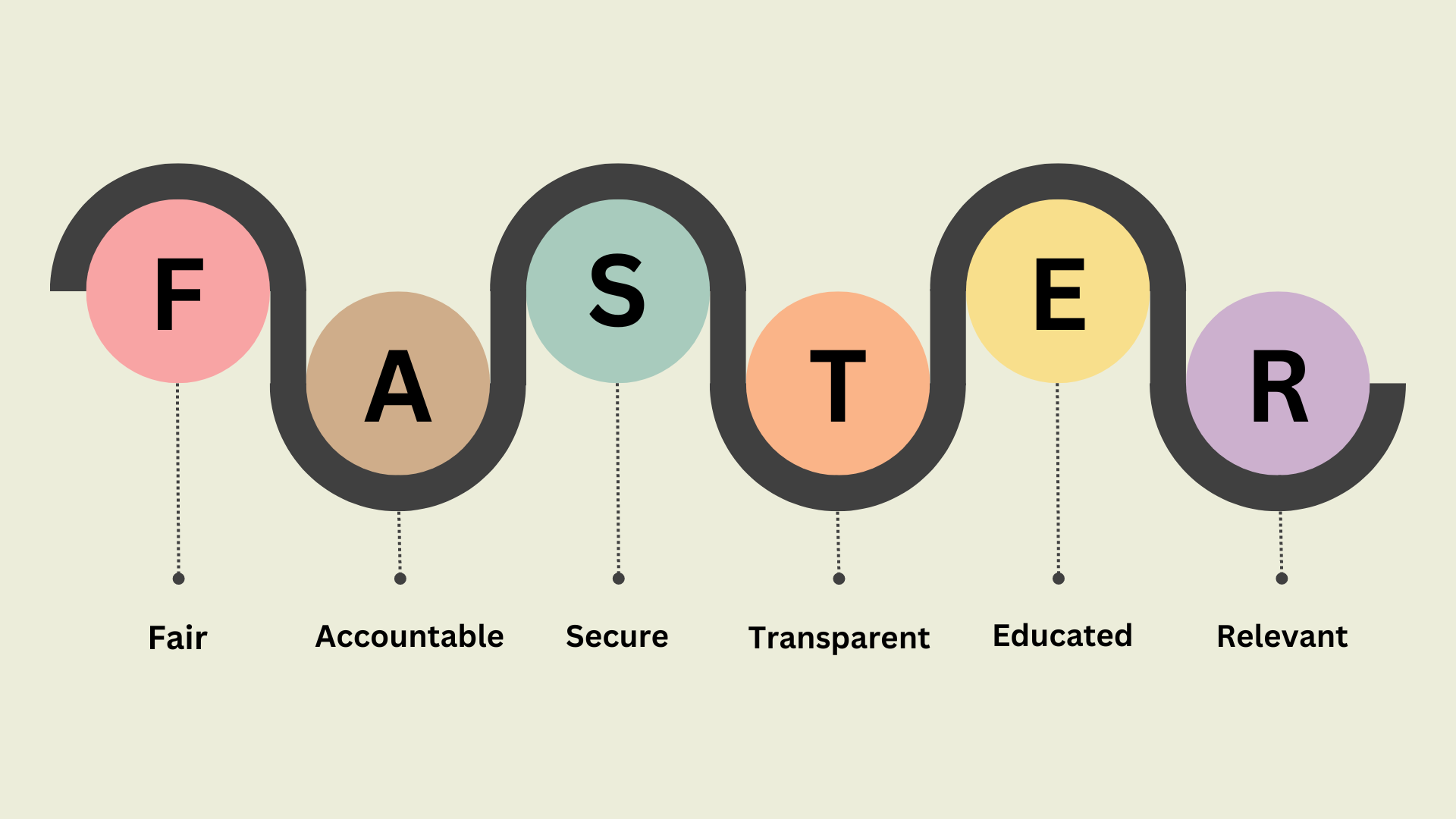

Introducing the FASTER framework


The image features a visual representation of the acronym “FASTER” arranged in a wavy line. Each letter is enclosed within a circle and connected by dotted lines to its corresponding attribute label. Moving from left to right, the sequence begins with "F" in a pink circle labelled "Fair," followed by "A" in brown for "Accountable," "S" in blue for "Secure," "T" in orange for "Transparent," "E" in yellow for "Educated," and "R" in purple for "Relevant."

The Treasury Board of Canada Secretariat’s FASTER principles (fair, accountable, secure, transparent, educated, and relevant) provide a comprehensive framework for enhancing the reliability and trustworthiness of generative AI and supporting its responsible use. They offer a set of guidelines designed to address the technology’s unique challenges. By adhering to these principles, you can deploy and use generative AI in a manner that is ethical, transparent, and accountable.

For each component of the FASTER framework, here are steps you can take to align your use of generative AI with these guiding principles.

Fair:

- Carefully review the tool’s outputs, as generative AI tools can produce biased or discriminatory content.

- Ensure that your prompts and interactions do not perpetuate stereotypes or unfair portrayals of individuals or groups.

- If you notice any biases in the responses, consider reporting them to the platform for improvement.

Accountable:

- Verify the information provided by generative AI tools, especially for critical decisions, as it might not always be up to date or entirely accurate.

- Use these tools ethically and within legal boundaries. Avoid prompts that encourage or involve illegal activities, misinformation, or unethical behaviour.

- Remember that you are responsible for how you use the information generated and for letting your manager know about your use of these tools.

Secure:

- Ensure that you understand the privacy policy and data handling practices of the platform.

- Don’t share personal information or protected data in your prompts, as this can pose privacy and security risks.

- Use these tools in a way that respects the privacy and security of others.

Transparent:

- Be aware that you are interacting with an AI and not a human. This awareness is crucial for setting realistic expectations regarding the nature and limitations of the responses.

- If you use outputs from generative AI in a public or professional setting, it is good practice to disclose that the content was generated by AI.

Educated:

- Familiarize yourself with the capabilities and limitations of each tool, since they are all different. Understanding what each tool can and cannot do will help you formulate effective prompts and interpret the responses correctly.

- Stay informed about advancements and updates in AI technology to use these tools more effectively.

- Only use these tools for tasks where you have the expertise to validate the responses.

Relevant:

- Use generative AI tools in scenarios where they add value and are appropriate. For instance, they work well for idea generation, explanations, language learning, and creative writing.

- Recognize situations where human judgment is crucial and understand that AI tools may not be suitable for every task, especially those requiring deep contextual understanding or ethical considerations.

The introduction of the FASTER principles by the Treasury Board of Canada Secretariat is a significant step towards establishing a standard for responsible AI use in the Canadian public sector.

Generative AI capabilities and limitations

While each aspect of FASTER is crucial, “educated” stands out as the central element in ensuring reliability and trustworthiness in generative AI tools. Awareness of the limitations of AI-powered tools can help you mitigate many of their weaknesses, including constraints that affect their reliability and trustworthiness. Here are a few key examples of generative AI limitations:

- Data timeliness: Training data often has a cutoff point, meaning the AI does not have access to or knowledge of events, developments, or research published after that date. This can lead to outdated or incomplete information.

- Infringement of intellectual property: Using or reproducing the outputs generated by these tools could potentially infringe on intellectual property rights if they contain material identical or substantially similar to a copyright-protected work.

- Understanding nuance and context: While advanced, generative AI sometimes struggles with understanding nuanced language, sarcasm, or context-dependent information. This can lead to misunderstandings or misinterpretations.

- Lack of personal experience: Generative AI does not understand emotions or personal nuances the way a person does, so it may not fully grasp or appropriately respond to questions requiring empathy, personal judgment, or lived experience.

- Overgeneralization: The tool might make overgeneralizations based on the patterns it learned from the training data, and this can lead to statements that are too broad or simplistic for complex topics.

- Bias in training data and models: The training data and models may contain biases, and generative AI might unintentionally reproduce or amplify these biases in its responses.

- Factually incorrect or misleading information: Despite its vast training data, generative AI can sometimes provide information that is incorrect or misleading, especially in areas where the data is limited.

- Limited deep expertise: While generative AI can provide general information across a wide range of topics, it may lack deep, expert-level understanding in specialized fields.

- Inability to verify or access external data: Generative AI cannot verify information against external databases or access paywalled, proprietary

Five approaches for ensuring trustworthiness and reliability

Now that you understand the importance of reliability and trustworthiness in generative AI, along with the FASTER principles and the limitations of these tools, let's put this knowledge into action. As a user, you play a key role in maintaining the reliability and trustworthiness of your interactions, especially when writing prompts, and you are responsible for verifying the content generated throughout the interaction. How can you ensure trustworthiness and reliability in your interactions with generative AI tools?

Keep in mind that when we talk about reliability and trustworthiness, we're referring to consistently receiving appropriate, accurate, complete, and relevant responses. We recommend the following approaches:

- Understand how the generative AI you’re using works and the data it’s drawing from

- Add context

- Provide examples, ideally multiple examples

- Ask for the chain of logic

- Consider what standard you need to set for the output

Understand how the generative AI you’re using works and the data it’s drawing from

Choosing the right tool for the task at hand is key to mitigating the limitations of AI tools and enhancing the reliability of their output. Not all generative AI tools are created equal: they share similarities but are designed for different uses, much as cars and trucks are both vehicles with distinct purposes. Just as you'd choose a truck for heavy-duty tasks and a car for daily travel, it's important to understand the specific job you need to accomplish and select the most appropriate AI tool. For instance, GPT-3.5 may not be the best choice for mathematical tasks, whereas Wolfram Alpha is specifically designed for them.

Some generative AI tools are trained only on data up to a certain date, so they can’t answer questions about current news. By contrast, Microsoft Copilot (formerly Bing Chat) supplements user prompts with related web search results, which helps counteract outdated information and lets users verify outputs against the source materials.

Add context

Because generative AI produces statistically probable responses, it benefits from additional context, which steers it toward the most relevant and valuable patterns in the data it was trained on and helps it tailor responses to your needs.

For example, the second prompt below is much more likely than the first to produce relevant and trustworthy responses:

General prompt

How can I organize my email inbox more effectively?

Context-improved prompt

Please provide strategies for organizing an email inbox in Microsoft 365 Outlook (desktop application) with the goal of increased efficiency when searching for invoices, specifically for procurement professionals.

Provide examples, ideally multiple examples

Generative AI tools don’t necessarily have data on how you or your organization writes. However, if you provide one or more examples of what a “good” output looks like, the tool can replicate their length, style, and level of detail. For example, if you’re writing a news release about an initiative, providing similar releases that were previously approved and published will increase the chance of receiving a high-quality, relevant output.

As always, never input sensitive, classified, or protected information into tools that operate outside the Government of Canada network. You can, however, include examples that are non-sensitive, transactional, or already publicly available.

Ask for the chain of logic

Asking for the chain of logic often improves both reliability (the relevance and quality of the output) and trustworthiness (your ability to understand how the output was generated and to fact-check it if necessary). This can be as simple as adding “please include, step by step, how you interpreted this prompt and arrived at an answer” to your prompt.

Prompt

I have an extremely busy day packed with meetings, urgent tasks, and looming deadlines. Here’s a breakdown of my schedule and additional tasks:

Scheduled commitments:

- 9:00 to 10:00 am: Strategy meeting with senior leadership team

- 1:00 to 1:45 pm: Team meeting to coordinate upcoming project

- 3:00 to 4:00 pm: Meeting to discuss budget adjustments

Tasks to complete:

- Complete and submit reviews and approval within the next two days.

- Catch up on writing 10 performance reviews that were due last week.

- Complete a mandatory online training session by end of day.

- Prepare a presentation deck for an upcoming conference, which is three days away.

- Schedule a biweekly one-on-one meeting with team members, which should be completed by the end of the week.

- The unit received an ATIP request and the response is due at 5:00 pm tomorrow. This request needs to be analyzed and sent to the teams that are potentially involved, and I need to follow up to ensure it is resolved promptly.

- Respond to an urgent parliamentary enquiry due at 12:00 pm. This request requires that everything else be dropped to be focused on. It needs to be analyzed and sent to the teams that are potentially involved, and I need to follow up to ensure it is answered promptly.

How should I organize my morning? What should I prioritize? Please include a step by step and explain how you interpreted this prompt and arrived at your proposed answer.

ChatGPT response

With such a packed schedule and array of tasks, it’s crucial to organize your morning efficiently to ensure you tackle the most pressing matter first. Let’s analyze the situation and prioritize accordingly:

Assess Priority Levels:

Determine which tasks are time-sensitive, critical, or have impending deadlines.

In your case, the urgent parliamentary enquiry and the ATIP request are immediate priorities as they have specific deadlines.

Allocate Time Slots:

Review your available time slots and allocate them to each task based on priority and estimated time needed. Now let’s organize your morning:

Morning Schedule:

- 8:00 – 9:00 AM: Preparation time. Use this hour to review your schedule, prioritize tasks, and gather any necessary materials or information for the day ahead.

- 9:00 – 10:00 AM: Strategy meeting with senior leadership team. This is a scheduled commitment and a crucial opportunity to align with senior leadership. Ensure you’re prepared.

- 10:00 – 11:00 AM: Urgent parliamentary enquiry. As this is a high-priority task that requires immediate attention, dedicate the next hour solely to analyzing the enquiry and initiating the necessary actions to formulate a response. Ensure to involve relevant teams promptly.

- 11:00 – 12 PM: ATIP request response preparation. Address the ATIP request, analyze its content, and begin drafting the response. It’s essential to involve relevant teams promptly to gather necessary information.

Midday Break:

- 12:00 – 1:00 PM: Lunch break

Take a well-deserved break to recharge and refuel for the rest of the day.

By following this schedule, you’ll effectively manage your morning, addressing both scheduled commitments and urgent tasks while ensuring you’re on track to meet deadlines and fulfill responsibilities. Remember to stay adaptable and flexible in case unexpected issues arise.

Consider what standard you need to set for the output

The level of reliability and trustworthiness you need should be a function of the context and importance of the task. If you’re using a generative AI tool to support initial brainstorming about a problem or possible solutions, and will later vet ideas, the standard for outputs can be quite low. If, on the other hand, you’re using generative AI as a support for public-facing content or policy research and advice, the standard is very high, especially when outputs include claims, framing, examples, syntheses, or facts and figures.

This is easy to see for facts and figures: a claim such as “X% of Canadians support a policy proposal” must be fact-checked before you include it in official work. Some generative AI tools can consult and cite references, a technique known as retrieval-augmented generation. However, depending on the tool, the answer may still be inaccurate, and the tool can fail to take crucial contextual information into account.

Lists of examples and syntheses of large datasets may also need to be verified for accuracy, even if you provide vetted data (non-sensitive or already public), because the generative AI tool could introduce errors or omissions. For instance, a list of expert opinions on a policy proposal that omits important critical or supportive stances may be misleading.

When you need a very high standard of trustworthiness, even the framing must be considered. Because of the examples and data generative AI draws from, responses often include phrases like “It’s imperative that governments invest in X.” Such phrasing is common and unremarkable, but it is certainly not neutral.

In all of these cases, keep in mind your own ability to critically reflect on the outputs and your expertise in the subject matter. If you are not able to verify the output, it is not appropriate to use generative AI for that task.

This may make it seem that there’s little value in using generative AI, or that it’s too much work. However, in many use cases generative AI can provide significant time savings with only a quick review, such as brainstorming, getting a quick overview of an issue or field, or drafting or improving correspondence. Still, it’s important to consider the standard of trustworthiness you need for a given task or product.

Conclusion

When we acknowledge and work within the constraints and considerations of generative AI tools, they can become valuable assets. They are particularly adept at summarizing information, answering frequently asked questions, drafting email responses, and even creating simple reports or presentations, enabling workers to focus on more nuanced tasks like strategic planning, client interaction, and project management.

By reading the Guide on the use of generative AI, following the FASTER principles, and applying the practical approaches in this article, you can work within AI’s constraints and realize its benefits.
